A major federal lawsuit accuses Meta of lying, harming the public, and violating parental consent laws

All but nine U.S. states (Alabama, Alaska, Arkansas, Iowa, Montana, Nevada, New Mexico, Texas, and Wyoming) are suing Meta, the parent company of Facebook and Instagram. Thirty-three states have joined a federal lawsuit led by California, while eight other states and the District of Columbia are filing separate lawsuits. (Arkansas, one of the nine, had already sued Meta separately in March.)

The federal lawsuit opened with a 228-page legal complaint, now unsealed to the public. At its core is the charge that Meta is harming children, breaking the law, and lying to the public about it. The suit charges that: (1) Meta claims that it doesn’t make its products intentionally addictive, but it does; (2) the company claims that its platforms are restricted to users 13 and older, but in fact it needs children under 13 on the platforms to cultivate the next generation of users; (3) Meta claims that social media is good for people, but both external evidence and Meta’s own internal studies show that it is harming them; and (4) Meta claims to police illegal and harmful content on its platforms, but it has in fact failed to do so.

The outcome of this lawsuit could have deep implications for U.S. society, so I want to lay out some of the highlights of the legal complaint here.

Intentionally Addictive

The first pillar of the complaint is that, rather than trying to make a product that is healthy and safe, Meta focuses on “maximizing engagement.” Of course, every company wants to maximize consumption of its product. But social media is unusually addictive. The complaint cites Dr. Mark Griffiths, Distinguished Professor of Behavioral Addiction at Nottingham Trent University:

The [dopamine] rewards are what psychologists refer to as variable reinforcement schedules and is one of the main reasons why social media users repeatedly check their screens. Social media sites are “chock-ablock” with unpredictable rewards. Habitual social media users never know if their next message or notification will be the one that makes them feel really good. In short, random rewards keep individuals responding for longer and has been found in other activities such as the playing of slot machines and video games.

Meta is well aware of these findings; the complaint mentions an internal study from 2018 called “Facebook ‘Addiction’,” which acknowledged that the design of the platforms can foster compulsive use. Sean Parker, a former president of Facebook, has also admitted on record that the underlying question in designing the systems was, “[h]ow do we consume as much of your time and conscious attention as possible?” He went on to say:

It’s a social-validation feedback loop . . . exactly the kind of thing that a hacker like myself would come up with, because you’re exploiting a vulnerability in human psychology. The inventors, creators—me, Mark [Zuckerberg], Kevin Systrom on Instagram, all of these people—understood this consciously. And we did it anyway.

Publicly, however, Meta denies that its platforms are designed to be addictive. The plaintiffs point out that Meta told the BBC that “at no stage does wanting something to be addictive factor into” Meta’s product designs. Likewise, a Meta executive told reporters that Meta “do[es] not optimize [its] systems to increase amount of time spent in News Feed.” Mark Zuckerberg made a similar assertion to Congress in 2021.

Meanwhile, the company appears to work hard to make its products addictive. The complaint accuses Meta of using several specific features designed to foster compulsive use, such as “infinite scroll” and “vanishing stories,” which disappear if you don’t view them soon enough.

The plaintiffs note that Meta actually piloted a version of Instagram called “Pure Daisy” which hid counts of “Likes” from users. But because this version decreased engagement, Meta did not implement it—and then falsely claimed it had abandoned it for another reason.

Targeting Youth

Of course, companies have always been allowed to sell products with varying degrees of “addictiveness,” and it’s up to people to decide whether to consume them. However, many such products are illegal for minors. The second pillar of the case against Meta is that the company intentionally targets minors, doesn’t bother to get parental consent, makes little real effort to keep children under the legal minimum age of 13 off the platform, and lies to the public about all of it.

The plaintiffs cite an email from a Meta product designer: “You want to bring people to your service young and early.” Another internal document states that the 13- and 14-year-old demographic is supposed to be the future of the platform.

Furthermore, the company is well aware of just how hooked teens have become; internal documents say that hundreds of thousands of teenagers spend more than five hours a day on Instagram. And the company wants more: an internal email from 2019 stated that a “stretch goal” was two million hours of teen watch time on Instagram videos.

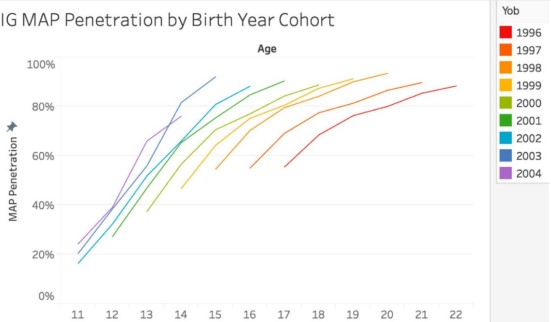

It’s not just teens. Meta is also actively targeting children under the official minimum age of 13. The complaint shows that Meta is fully aware that users can simply lie about their age when they sign up and that, as a result, millions of Instagram users are under 13.

The 13-year-old age minimum is legally significant. The complaint accuses Meta of breaking the law by violating the Children’s Online Privacy Protection Act of 1998 (COPPA), which requires companies to obtain verifiable parental consent before collecting personal information online from children under 13. Meta collects personal information from users under 13 without even trying to get parental consent, yet it publicly maintains that children under 13 are not allowed to use its platforms.

Bad for Mental Health

Finally, having established that Meta is intentionally trying to addict children and teenagers to its products while claiming otherwise, the plaintiffs try to show that this is harming young users. This is an easy enough case to make, because a growing body of evidence indicates that the downward trends in youth mental health that began in the early 2010s are directly attributable to the spread of smartphones and social media. The complaint relies largely on the work of social scientists Jonathan Haidt and Jean Twenge, among others.

The plaintiffs write that although research shows a clear correlation between poor mental health and social media use (stronger, in fact, than for TV or video games), Mark Zuckerberg has refused to admit this, despite internal Meta research that says so and despite outside researchers who have told him the same thing. Instead, Zuckerberg has testified to Congress that research shows social media is good for users’ well-being.

Harmful Content

All of these problems are intrinsic to social media use, before you even get to “harmful content.” Regarding harmful content, the plaintiffs write:

Meta’s Recommendation Algorithms are optimized to promote user engagement. Serving harmful or disturbing content has been shown to keep young users on the Platforms longer. Accordingly, the Recommendation Algorithms predictably and routinely present young users with psychologically and emotionally distressing content that induces them to spend increased time on the Social Media Platforms. And, once a user has interacted with such harmful content, the Recommendation Algorithm feeds that user additional similar content.

According to the complaint, internal research has revealed that the algorithms tend to lead people into “rabbit holes” of negative content related to topics such as anorexia, body dysmorphia, self-harm, and suicide. Yet Meta has publicly denied this, stating, “we do not amplify extreme content.” The algorithms also seem to favor sexual content, and sexual harassment is common: in a recent internal survey, 13 percent of 13- to 15-year-old users reported that they had experienced unwanted sexual advances on Instagram in the previous week alone.

For more evidence that Meta is aware of these problems, the complaint cites a 2021 email that Arturo Bejar, Meta’s former Director of Site Integrity, sent to Zuckerberg and other executives, expressing his concern about Meta’s failure to enforce its Community Standards. (The Wall Street Journal recently ran a feature on Bejar, who campaigned for more effective content policing after discovering the sexual harassment his own 14-year-old daughter was experiencing on Instagram.) His concerns have not been addressed.

The End of an Era?

Activists are talking about “treating social media like Big Tobacco.” That’s what these attorneys general are trying to do; in fact, they make the comparison explicit in the complaint.

Cracking down on social media may seem crazy now, but perhaps no crazier than cracking down on tobacco might have seemed in the mid-twentieth century. Whatever happens, it’s hard to overstate just how deeply the outcomes of this case and others like it could shape society. The upcoming generation of children will run the world soon. How they run it will depend upon the state of their minds and souls, and that will be significantly affected by the extent to which social media companies like Meta are allowed to poison and enslave them.

Daniel Witt (BS Ecology, BA History) is a writer and English teacher living in Amman, Jordan. He enjoys playing the mandolin, reading weird books, and foraging for edible plants.

Copyright © 2024 Salvo | www.salvomag.com https://salvomag.com/post/will-social-media-go-the-way-of-cigarettes